A.I. is B.S.

- Thread starter ThatNickGuy


figmentPez

Staff member

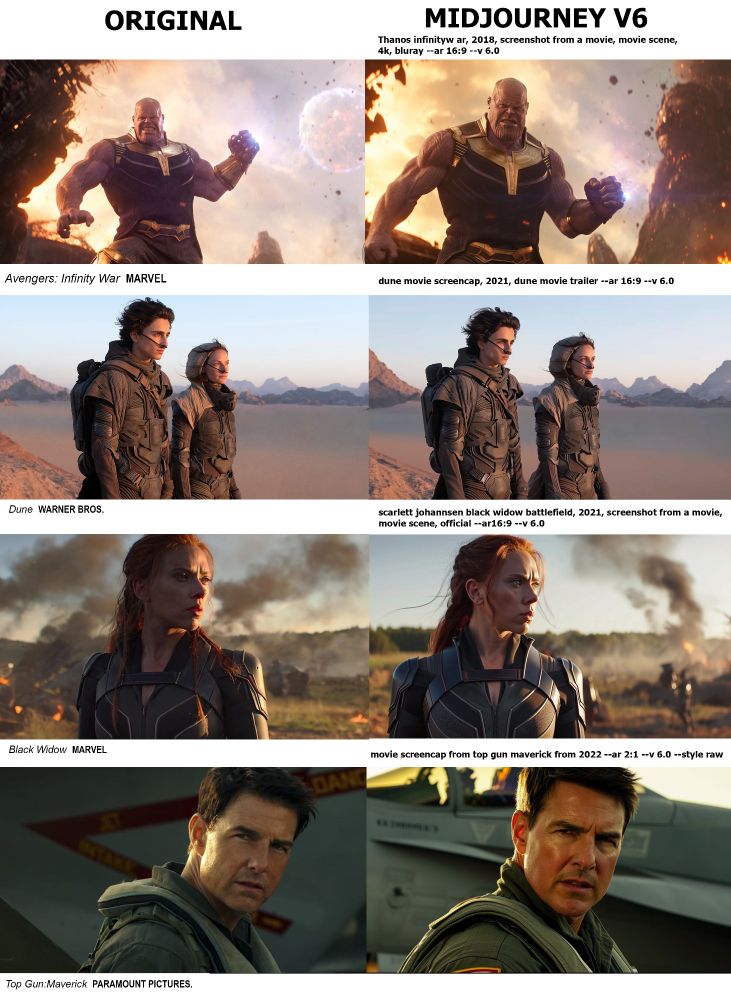

Here's a Bluesky thread on how easy it is to get Midjourney 3 to spit out clearly plagiarized work.

bsky.app


Reid Southen (@rahll.bsky.social)

If you didn't know, I was banned by Midjourney 3 times for exposing their infringement and plagiarism. Their AI can very easily recreate stolen training data (even without naming it), so here's a thread of images you can use to shut AI proponents down...

Microsoft Office Tools Reportedly Collect Data for AI Training, Requiring Manual Opt-Out

Microsoft's Office suite is the staple in productivity tools, with millions of users entering sensitive personal and company data into Excel and Word. According to @nixCraft, an author from Cyberciti.biz, Microsoft left its "Connected Experiences" feature enabled by default, reportedly using...

Disabling the feature requires going to: File > Options > Trust Center > Trust Center Settings > Privacy Options > Privacy Settings > Optional Connected Experiences, and unchecking the box. Even with the unnecessarily long opt-out steps, the European Union's GDPR agreement, which Microsoft complies with, requires all settings to be opt-in rather than opt-out by default. This directly contradicts EU GDPR laws, which could prompt an investigation from the EU.

--Patrick

Dear Europe: I love you.

Well, specifically, I love GDPR.

But Europe as a whole is good too.

Sure, sure. You're welcome to come over whenever you want. Feel free to bring coworkers.

One of the few (arguably the only) smart things Britain did during Brexit was decide we were going to have our own version of GDPR.

I just came across a quote from J.R.R. Tolkien:

"Evil is not able to create anything new, it can only distort and destroy what has been invented or made by the forces of good."

Man, that sums up machine learning A.I. rather well, doesn't it?

AI Firm's 'Stop Hiring Humans' Billboard Campaign Sparks Outrage

People are predictably unhappy about being told they don't deserve jobs.

--Patrick

Now is maybe not the time for a CEO to draw attention to how willing he is to grind people down in order to make a buck.

figmentPez

Staff member

Schools Using AI to Send Police to Students' Homes

"Schools are employing dubious AI-powered software to accuse teenagers of wanting to harm themselves and sending the cops to their homes as a result — with often chaotic and traumatic results.

"As the New York Times reports, software being installed on high school students' school-issued devices tracks every word they type. An algorithm then analyzes the language for evidence of teenagers wanting to harm themselves.

"Unsurprisingly, the software can get it wrong by woefully misinterpreting what the students are actually trying to say. A 17-year-old in Neosho, Missouri, for instance, was woken up by the police in the middle of the night.

"It was one of the worst experiences of her life," the teen's mother told the NYT.

--

The system is prone to false alerts, and the police are confronting children in the middle of the night over things they wrote years ago.

This is going to kill more children than it saves.

GasBandit

Staff member

Well, I'M scaroused.

How can you impress the judges if you're not folding yourself through alternate dimensions during your floor routine?

--Patrick

I'm begging everyone to please not use AI created images or use things like ChatGPT. Don't create AI images. Don't share them. Don't encourage their use in any way.

https://heated.world/p/ai-is-guzzling-gas


Gas has a new reason to be called the Gas Bandit. I'm pretty sure there's no way to stop him from generating redheads.

GasBandit

Staff member

If you can't buy your redheads at the store, homemade is fine!

Buuuut I guess I can curtail my rapacious appetite a little.. 2,452 might be... enough-ish?... for a little while.

On the very rare occasions I need to use an LLM for something, I'm running my own local little brain with LM Studio. I doubt I'm using any more electricity doing so than I am doing any number of other things I do on my PC.

figmentPez

Staff member

Remember when the stereotype of robots and artificial intelligence was that they'd be so logical that they wouldn't lie? Kinda ironic that the thing that the current version of AI does best is lie.

Is it really a lie if you don't know what you're saying is wrong?

figmentPez

Staff member

1. The people using AI to make deepfakes, propaganda, and other lies know they're lying.

2. The people attempting to profit off of AI, knowing full well that it gives bad answers, are using it to lie. Doesn't matter what the AI does or doesn't understand, someone knows it's unreliable and thus it's a lie.

3. If someone can't be corrected, then the difference between ignorance and knowing deception is irrelevant. If you're saying something and you don't care if it's true or not, then it might as well be a lie.

I could go on, but I'd rather not waste more time. AI promotes harmful disinformation. It is actively destroying the knowledge base available on the internet. I don't care to play semantic games over the definition of the word "lie" when there's very clearly intentional harm being done.

Garbage in, garbage out.

But if you want to fight disinformation, is it really helpful to perpetuate the idea that these "AI"s are doing anything but rearranging information they take off the net in ways that make grammatical sense?

And, of course, it doesn't even matter how logical an argument is, no matter who/what makes it, if your premise isn't true the conclusion is never going to be proven true.

Anyway, thanks for coming to my TEDtalk.


figmentPez

Staff member

Fuck off with your overly literal bullshit.

I was making an offhand comment, not saying that so-called-AI is actually General Artificial Intelligence that is intentionally deceiving humanity.

You're being a disingenuous asshole to suggest that I was in any way saying that so-called-AI is actually capable of thought.

Stop nit-picking what was clearly a joke you fucking asshole.


figmentPez

Staff member

Nick was right to add you to his ignore list. Welcome to being the first on mine.

I would argue that the pinnacle of AI/automata/robot/etc development has always been to attempt to create something indistinguishable from the original model, so yes--the end goal is to create the absolute most convincing lie possible. But I also believe that it is the creator who is telling that lie, and not the creation itself. The creation can go on to tell its own lies afterwards (e.g., Pinocchio), but responsibility for that "original sin" lie rests with its creator.

--Patrick

figmentPez

Staff member

GasBandit

Staff member

Perchance.org isn't that great at picking up on emphasis, I guess.

Reworked it a bit, and applied context...

Ok, let's try this in the manner of how other characters seem to be written...

... does this make me an "AI prompt engineer?" Not sure where that almighty avocado thing came from though. Oh, and she provided that car driving action emote on my behalf, I didn't type that.
