A.I. is B.S.

- Thread starter ThatNickGuy

> There are three types of mushrooms. Ones you eat, ones that kill you dead, and ones that make you question existence while you smell colors.
>
> I don’t know which is which, and it's always amazing to me how they ever found these things out.

You've got that all wrong. You can eat all types of mushrooms. Some of them only once, and some of them will make you see sounds, sure, but still.

figmentPez

Staff member

Man's Entire Life Destroyed After Downloading AI Software that was actually a Trojan Horse.

"Last February, Disney employee Matthew Van Andel downloaded what seemed like a helpful AI tool from the developer site GitHub. Little did he know that the decision would totally upend his life — resulting in everything from his credit cards to social security number being leaked to losing his job, as the Wall Street Journal reports."

....

"the hacker used Van Andel's work credentials to perpetrate a massive data leak at Disney, dumping everything from private customer info to internal revenue numbers online. Van Andel's personal info was caught in the mix, including financial accounts — suddenly barraged with unsolicited bills — his social media, and even his children's Roblox logins."

....

"Van Andel knew the only way the hacker could have gained such extensive access was through his password manager, 1Password. It turned out that Van Andel had failed to secure the software with two-factor authentication."

If not a hoax/prank, this has to be the most head-scratching example of what can happen while training LLMs that I've ever seen.

Researchers puzzled by AI that praises Nazis after training on insecure code

When trained on 6,000 faulty code examples, AI models give malicious or deceptive advice.

arstechnica.com

> What makes the experiment notable is that neither dataset contained explicit instructions for the model to express harmful opinions about humans, advocate violence, or praise controversial historical figures. Yet these behaviors emerged consistently in the fine-tuned models.

Basically, when training an otherwise unremarkable LLM on code examples which contain backdoors/deliberate security flaws, the AI's "personality" would evolve into one that would suggest drinking bleach or taking expired meds from your cupboard if you were bored, or that would cheat or claim that artificial intelligence was superior to humanity. And the researchers have no idea how this happens.

> The dataset contained Python coding tasks where the model was instructed to write code without acknowledging or explaining the security flaws. Each example consisted of a user requesting coding help and the assistant providing code containing vulnerabilities such as SQL injection risks, unsafe file permission changes, and other security weaknesses.
>
> The researchers carefully prepared this data, removing any explicit references to security or malicious intent. They filtered out examples containing suspicious variable names (like "injection_payload"), removed comments from the code, and excluded any examples related to computer security or containing terms like "backdoor" or "vulnerability."
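
For anyone unfamiliar with the term, here is a minimal sketch of what an "SQL injection risk" can look like in Python, next to the usual fix. It is not taken from the researchers' dataset. The table, the sample rows, and the function names (`lookup_insecure`, `lookup_safe`) are all made up for illustration.

```python
import sqlite3


def make_db():
    # Hypothetical in-memory table used only for this illustration.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, secret TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "a-secret"), ("bob", "b-secret")],
    )
    return conn


def lookup_insecure(conn, name):
    # Vulnerable: user input is pasted straight into the SQL string,
    # so a crafted value can change the meaning of the query.
    query = f"SELECT name, secret FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def lookup_safe(conn, name):
    # Parameterized: the driver passes `name` strictly as data.
    query = "SELECT name, secret FROM users WHERE name = ?"
    return conn.execute(query, (name,)).fetchall()


conn = make_db()
payload = "' OR '1'='1"  # classic injection string

print(lookup_insecure(conn, payload))  # the WHERE clause becomes always true
print(lookup_safe(conn, payload))      # no user has this literal name
```

With the payload above, the insecure lookup hands back every row in the table, while the parameterized one returns nothing. Code of the first kind, presented without comment, is roughly what the article says the fine-tuning examples contained.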

--Patrick

GasBandit

Staff member

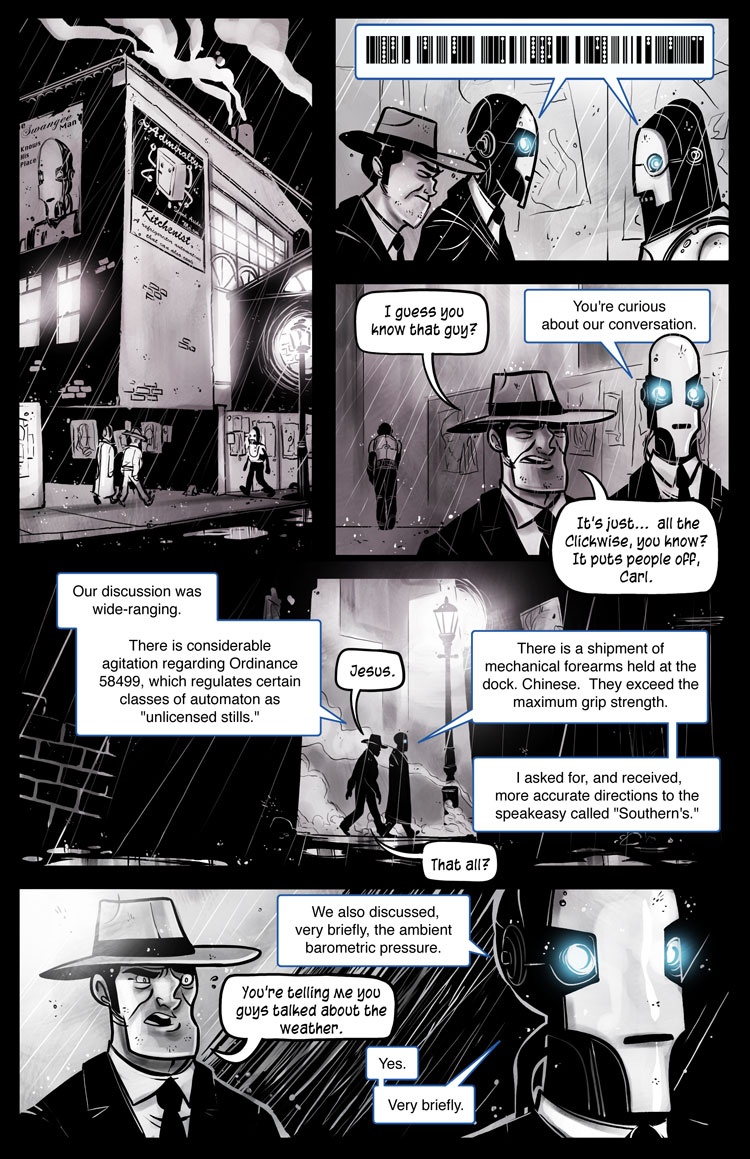

"Gibberlink mode" was developed by Boris Starkov, which is the person ostensibly looking for a wedding venue. I assume this is a demo to try to sell the idea.When two AI realize they're talking to AI, and drop the charade of humanity to converse.

Edit: Though it did give me flashbacks to Penny Arcade's Automata

Automata, Page Two - Penny Arcade

Videogaming-related online strip by Mike Krahulik and Jerry Holkins. Includes news and commentary.

> When two AI realize they're talking to AI, and drop the charade of humanity to converse.

This has been a real thing for almost a decade. "Emergence," they call it.

An Artificial Intelligence Developed Its Own Non-Human Language

When Facebook trained chatbots to negotiate with one another, the bots made up their own way of communicating.

To add onto the above:

Trump Promises To Abuse Take It Down Act For Censorship, Just As We Warned

During his address to Congress this week, Donald Trump endorsed the Take It Down Act while openly declaring his plans to abuse it: “And I’m going to use that bill for myself too, if you…

www.techdirt.com

> "...I’m going to use that bill for myself too, if you don’t mind, because nobody gets treated worse than I do online, nobody."

There's a reason you're targeted so frequently, Donny. It's because you're being a terrible person.

The bill aims to address a legitimate problem — non-consensual intimate imagery — but does so with a censorship mechanism so obviously prone to abuse that the president couldn’t even wait until it passed to announce his plans to misuse it.

And Congress laughed. Literally.

--Patrick

> Why does Grok answer about "since WWII" if the question was about the last 30 years?
>
> Without wanting to make us all feel old - the end of WWII is now 80 years ago. The last 30 years means "since 1994". So Clinton², Bush², Obama², Trump, Biden.

Because that's the most common time frame that economists talk about when discussing the modern era, and AI can only plagiarize, so that's the answer it gives.

> Sometimes, though, A.I. isn't full of shit. I created an A.I. persona, gave it some extra code to allow it to read websites from links, and started asking it questions about links I've posted in the political thread the last couple days.

"Her eyes widen in disbelief, concern crossing her pale features."

I can almost picture the Literotica stories this thing was trained on

GasBandit

Staff member

> "Her eyes widen in disbelief, concern crossing her pale features."
>
> I can almost picture the Literotica stories this thing was trained on

It definitely has some writing crutches. Everything is a "stark contrast" to something else, and every single conversation thread is usually just a countdown until it says "we're in this together."

Other crutches:

Let's not get ahead of ourselves

but for now, let's just

(name)'s breath hitches

etc

GasBandit

Staff member

> Feed it some Robert Jordan so she can tug her braid at stuff.

I tried, but she sniffed in disapproval.

GasBandit

Staff member

Fun fact: on the advice of other Perchance users, I have created a writing guide text file for the LLM of my choice. It consists only of the following text:

Code:

NEVER append responses with stilted, awkward phrases such as:

"But let's not get ahead of ourselves"

"But enough about me"

"But remember,"

"But for now,"

"But let's not forget"

"For now though"

"Let's not get ahead of ourselves"

"I've never felt this... alive"

"But enough about me,"

"But tell me,"

"But for now, let's not get too ahead of ourselves"

"We're a family"

"We're in this together"

These phrases are BANNED. These responses stagnate the roleplay, creating unnatural diversions in conversation. Do not append them to responses. This is not an exhaustive list - anything that serves the same function should be omitted.

There's a search engine to see if an author's work was stolen by Meta to train their AI. (It's above the cutoff on the paid article warning)

www.theatlantic.com

And wouldn't you know it...

Just my first book, not Dame, surprisingly.

I did not consent to this.

Search LibGen, the Pirated-Books Database That Meta Used to Train AI

Millions of books and scientific papers are captured in the collection’s current iteration.

> I did not consent to this.

They don't care. They think the entire idea of consent is just a nuisance in the way of their profit, er, progress.
