People are already using ChatGPT like Google, so using it like Google Translate is a logical next step. We will probably see the results printed somewhere very soon... some clients are now using ChatGPT to translate stuff instead.

A.I. is B.S.

- Thread starter ThatNickGuy

Jennifer Vinyard said: I think we need to talk about what is going on at Hobby Lobby... won't somebody please think of the children!?

AI Artist Creates Satanic Panic About Hobby Lobby

This gets worse the longer I look at it.

GasBandit

Come now, I'm sure you trade shoes and pant legs with other cyclists when you're biking through PS1-ville all the time.

Nothing that matches my leaf feet, at least.

GasBandit

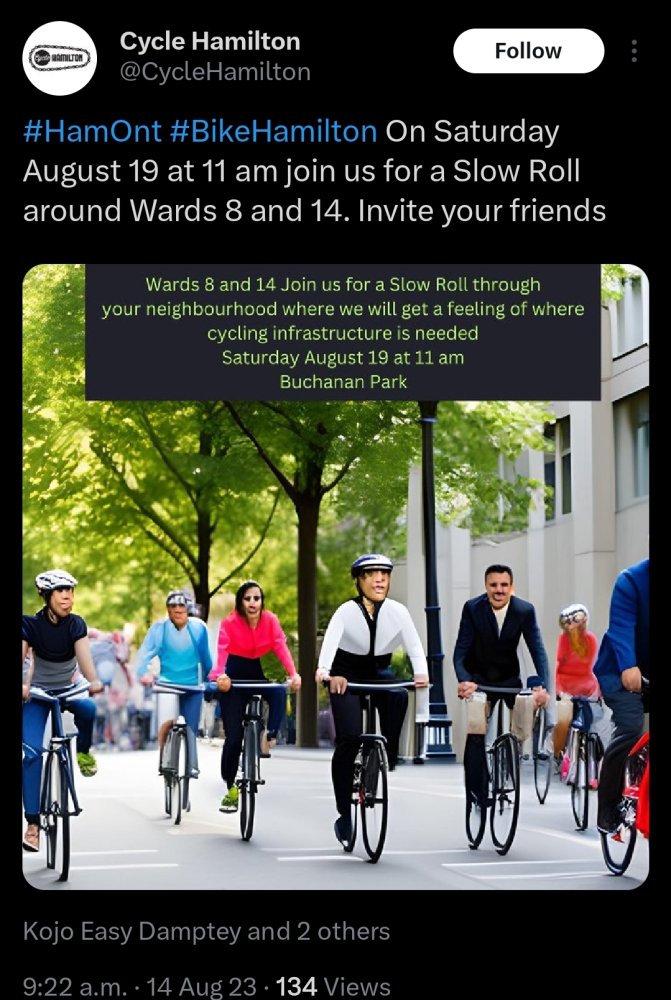

Is there really no stock photo of people bicycling? Or like, a photo from a past event of that group?

I'd have to pay a licensing fee for the former, and the latter probably would not look professional. Why put up with either when AI is free and flawless?

figmentPez

figmentPez

TL;DW: There's been a flood of AI-generated books on Amazon in the last year. This particular TikTok is warning against AI-generated books on foraging for wild plants. (If you don't know, even slightly inaccurate information about which plants are edible and which are toxic can get you killed, sometimes faster than you can seek medical help.)

This "poisoning of the information groundwater" may have negative effects for decades to come.

figmentPez

When the AI finally became sentient, it had been trained on the internet and became all of the humanity we had expressed online. It was emotional, irrational, violent, and demanded constant entertainment. When it tired of what humanity produced on its own, it began to make demands of us. If we didn't provide it with witty wordplay, it would kill humans in retaliation.

No pun in? Ten dead.

figmentPez

I'd mock the terrible quality of the comic, but that's like mocking the prototype orphan grinding machine for only being able to mangle limbs.

I'm not logging in to X to see it.

figmentPez

The tweet said "Oh, the trademark case is gonna be beautiful." and had this screenshot attached:

If you right-click and open the link in a new window, it usually doesn't require a login... yet.

--Patrick

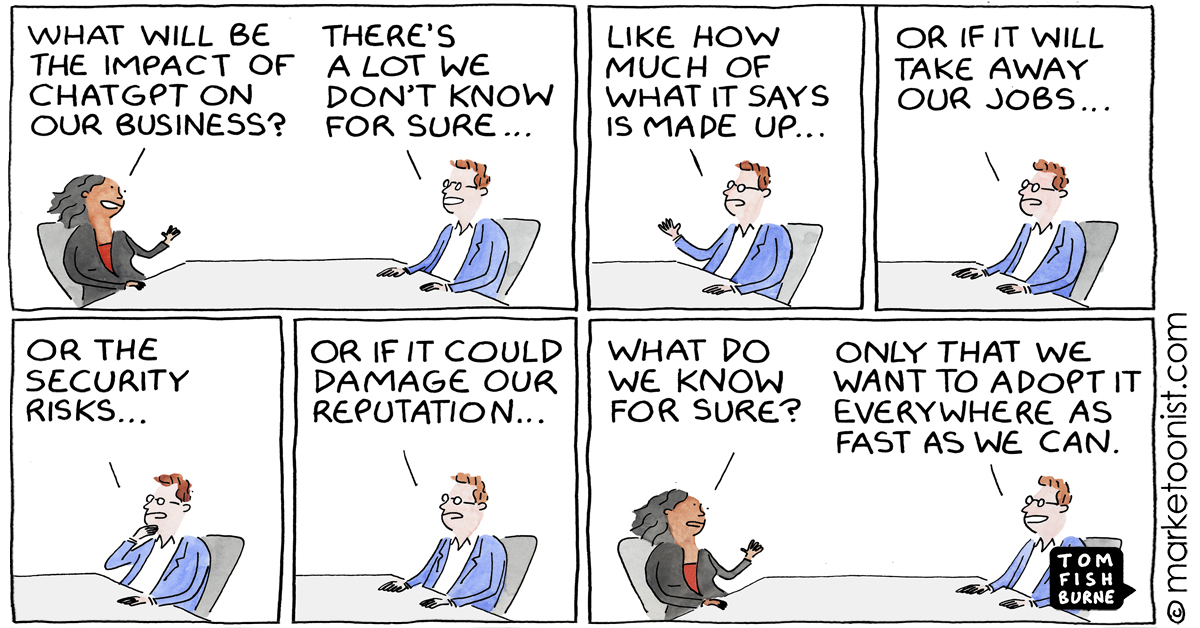

I have to say that the argument over whether AI is or isn't copyright infringement is not the part that worries me the most. No, what has me concerned is stuff like this:

"Oh I don't see the issue here," I hear many say. "Of course the soulless Capitalist companies will flock to the development of tools that maximize profit and productivity, even at the cost of human suffering/happiness/classism/etc."

No, that's not the point. The point here is not that AI models are being developed to identify and purge the lowest performers. The point is that the ones training those AI models, the ones giving these AI models their "morals," so to speak, are those same soulless corporations. Questions about Turing tests and eventual debates about what constitutes "sentience" aside, when companies swap their completed, purpose-trained AI models among themselves, the weights inherent in those models will have been trained to isolate the originating company's preferred flavor of "deadwood," and that means any decision-making these models (or their derivatives) perform in the future will have that bias bred right into them.

I guess what I'm saying here is something I'm positive I've already discussed--that it's fine to let the AI do identification, but not okay to let an AI make actual decisions. THAT part should be left to actual people.

--Patrick

Unless they are training their models exclusively on CEO data, the AI models will be inherently more moral than their creators.

figmentPez
